Your Reading Insights Are Leaking — And You're Paying for the Privilege

You've built the system. Obsidian vault, Readwise account, Notion database, maybe a Drafts inbox. You highlight, you clip, you annotate. Your second brain is real and it's growing.

But there's a hole in it you've probably stopped noticing because you've accepted it as permanent.

Everything you absorb while listening disappears.

The insight that hit you during your morning commute while an audiobook was playing. The passage you wanted to save while folding laundry with your AirPods in. The moment a narrator's emphasis made a sentence land differently than it reads on the page. Gone. You can't highlight audio. You can't clip a timestamp into Obsidian. The audio side of your reading life has always been a black hole in the knowledge pipeline you've otherwise built so carefully.

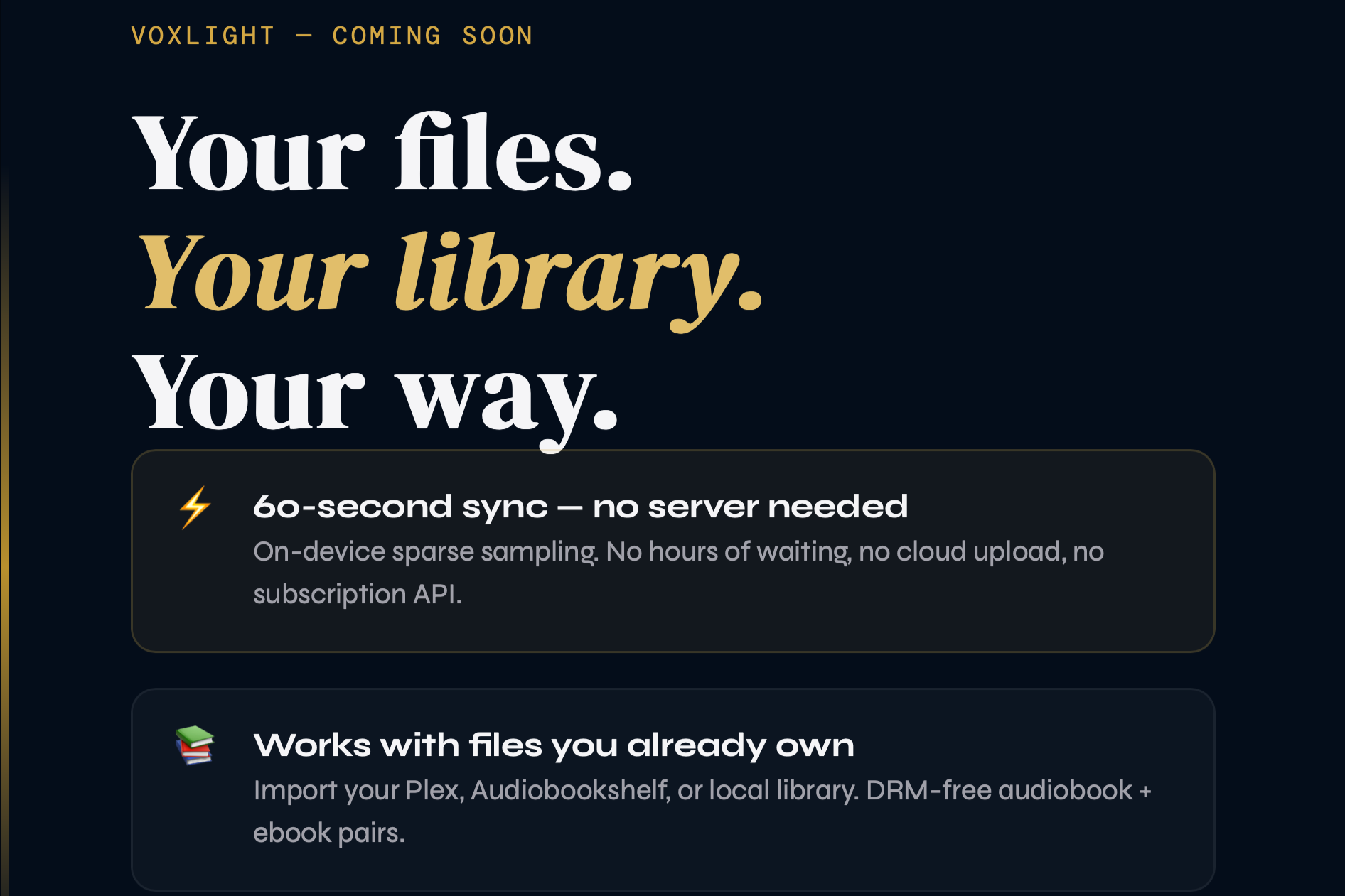

This is the problem VoxLight was built to solve.

The Capture Problem Nobody Talks About

The productivity and PKM community has spent years perfecting the processing side of knowledge work. Zettelkasten, progressive summarization, spaced repetition, linked thinking — there's an entire literature on what to do with ideas once you have them.

The capture side gets less attention, maybe because it seems solved. You highlight in your ebook reader. Readwise pulls it. The note lands in Obsidian. Pipeline complete.

Except it isn't, because that pipeline only works if you're reading. The moment you switch to listening (the default for most people while they commute, exercise, cook, or do anything else with their hands), the pipeline breaks. You're consuming content with no mechanism to capture what matters.

Audiobook listeners have developed workarounds. You probably have your own version: pause the book, open a notes app, type something approximate before you forget it, resume. Or use voice memos. Or just accept the loss and hope the insight comes back during active reading.

These are all the same workaround: manually bridging a gap that shouldn't exist.

What Audio-Text Synchronization Actually Means

VoxLight syncs your audiobook and ebook so they're the same object in the same app. When you're listening, you can see the text scrolling in sync with the narration. When you switch to reading, you pick up exactly where the audio left off — not approximately, not close enough, exactly.

The technical approach matters here. VoxLight samples twenty 10-second clips from your audiobook, transcribes them on-device using Apple's speech recognition, and uses those transcripts as anchor points to align the full text. The whole process takes under 60 seconds. No server, no cloud API, no upload: your files stay on your device throughout.
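VoxLight's code isn't public, so treat this as a rough Python sketch of the approach as described, not the app's implementation. It assumes the twenty clips are spaced evenly across the book and that each clip has already been transcribed; the on-device speech recognition step is stubbed out as plain strings. Python's `difflib` longest-common-substring match stands in for whatever fuzzy matching VoxLight actually uses, and `plan_clips`, `locate_transcript`, `build_anchors`, and the `min_match` threshold are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass(frozen=True)
class Anchor:
    audio_seconds: float  # start of the sampled clip in the audiobook
    char_offset: int      # where that clip's transcript starts in the ebook text


def plan_clips(duration_s: float, count: int = 20, clip_s: float = 10.0) -> list[tuple[float, float]]:
    """Spread `count` clips of `clip_s` seconds evenly from start to end."""
    if duration_s <= clip_s:
        return [(0.0, duration_s)]
    step = (duration_s - clip_s) / max(count - 1, 1)
    return [(i * step, i * step + clip_s) for i in range(count)]


def locate_transcript(book_text: str, transcript: str, min_match: int = 12) -> Optional[int]:
    """Guess where `transcript` starts in `book_text` from their longest common
    substring. Both are lowercased so capitalization doesn't matter (this keeps
    offsets valid as long as lowercasing doesn't change length, true for ASCII)."""
    a, b = book_text.lower(), transcript.lower()
    m = SequenceMatcher(None, a, b, autojunk=False).find_longest_match(0, len(a), 0, len(b))
    if m.size < min_match:
        return None  # too little overlap to trust as an anchor
    # Shift back by how far into the transcript the shared run begins.
    return max(m.a - m.b, 0)


def build_anchors(clips: list[tuple[float, float]], transcripts: list[str], book_text: str) -> list[Anchor]:
    """Pair each clip with its transcript's text position, keeping only anchors
    whose text position moves forward as the audio does."""
    anchors: list[Anchor] = []
    for (start, _end), transcript in sorted(zip(clips, transcripts)):
        offset = locate_transcript(book_text, transcript)
        if offset is None:
            continue
        if anchors and offset <= anchors[-1].char_offset:
            continue  # out of order: probably a phrase that repeats elsewhere
        anchors.append(Anchor(start, offset))
    return anchors
```

Dropping out-of-order matches is a deliberately blunt choice: with only twenty anchors, losing one costs little, while keeping a wrong one would make the text jump backwards during playback.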

This means the highlight you want to save while listening is now just a highlight — the same gesture you'd make while reading. Tap the passage. It's saved with the audio timestamp. The pipeline that was broken is now continuous.
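Once anchors exist, moving between audio and text comes down to interpolating between them. This hypothetical sketch assumes the narrator's pace is roughly constant between neighboring anchors, so a playback time maps linearly to a character offset and back; that is enough to stamp a tapped passage with an estimated audio position. `Anchor`, `Highlight`, and the helper functions are illustrative names, not VoxLight's API.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    audio_seconds: float
    char_offset: int


@dataclass(frozen=True)
class Highlight:
    text: str
    char_start: int
    char_end: int
    audio_seconds: float  # estimated moment the narrator reaches char_start


def _interpolate(x: float, xs: list, ys: list) -> float:
    """Piecewise-linear interpolation over strictly increasing xs, clamped at both ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)  # now xs[i - 1] <= x < xs[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def audio_to_text(seconds: float, anchors: list) -> int:
    """Character offset to show when playback is at `seconds`."""
    xs = [a.audio_seconds for a in anchors]
    ys = [a.char_offset for a in anchors]
    return round(_interpolate(seconds, xs, ys))


def text_to_audio(offset: int, anchors: list) -> float:
    """Estimated playback position for a character offset."""
    xs = [a.char_offset for a in anchors]
    ys = [a.audio_seconds for a in anchors]
    return float(_interpolate(offset, xs, ys))


def make_highlight(book_text: str, start: int, end: int, anchors: list) -> Highlight:
    """Save a tapped passage together with its estimated audio timestamp."""
    return Highlight(book_text[start:end], start, end, text_to_audio(start, anchors))
```

The same two functions cover both directions: `audio_to_text` keeps the scrolling text in step with narration, and `text_to_audio` lets a highlight carry its timestamp.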

The Apple Intelligence Layer

Here's where it gets interesting for knowledge workers specifically.

Apple Intelligence is built to run on-device. Summarization, semantic search, writing tools: processed locally on supported iPhones and iPads, free, and private by architecture rather than by policy.

Most highlight aggregation tools — Readwise being the obvious example — require your reading data to live on their servers to do anything intelligent with it. Their AI features, their spaced repetition, their semantic search: all cloud-processed. For casual users this is fine. For people who've deliberately built self-hosted infrastructure, who run Plex instead of Netflix and Audiobookshelf instead of Audible, it's a quiet contradiction at the center of their setup. You've taken ownership of your media. Your reading behavior — what you highlight, how often, which passages — is still being logged somewhere you don't control.

VoxLight's integration with Apple Intelligence closes that loop. The highlights you capture — synchronized to the exact audio moment that produced them — can be processed, organized, and made queryable entirely on your own hardware. No API costs. No subscription tier for intelligence features. No data sovereignty compromise.

What This Makes Possible

VoxLight will expose native Apple Shortcuts actions — not the generic set that an outside developer imagines readers need, but the set that emerges from actually living inside the problem for years. Combined with Apple Intelligence on-device processing, this enables a reading workflow that doesn't currently exist:

You capture a highlight while listening. Apple Intelligence processes it on your device — extracts themes, identifies connections to your existing highlights, generates a summary if you want one. A Shortcut you've built routes the processed highlight to Obsidian or Notion or Drafts with full metadata intact. A month later, you can query your own highlights semantically — "what have I been reading about attention and distraction?" — and get a synthesized answer that never touched a third-party server.
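VoxLight's Shortcuts actions haven't been published, so nothing here calls them. As a rough illustration of the final routing step only, here is a hypothetical Python sketch that turns an already-processed highlight into an Obsidian-ready Markdown note with YAML front matter, which Obsidian reads as note properties. The field names (`book`, `author`, `audio_timestamp`, `themes`) are a guess at what "full metadata" might include, not VoxLight's schema.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ProcessedHighlight:
    book: str
    author: str
    text: str
    audio_seconds: float
    themes: list = field(default_factory=list)  # e.g. themes suggested by an on-device model
    summary: str = ""


def format_timestamp(seconds: float) -> str:
    """Whole seconds as HH:MM:SS."""
    hours, rem = divmod(int(seconds), 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def _yaml_str(value: str) -> str:
    # A JSON string is also a valid YAML double-quoted scalar, so quotes
    # and colons in titles can't break the front matter.
    return json.dumps(value, ensure_ascii=False)


def to_obsidian_note(h: ProcessedHighlight) -> str:
    """Render a highlight as Markdown with YAML front matter."""
    lines = [
        "---",
        f"book: {_yaml_str(h.book)}",
        f"author: {_yaml_str(h.author)}",
        f"audio_timestamp: {_yaml_str(format_timestamp(h.audio_seconds))}",
    ]
    if h.themes:
        lines.append("themes:")
        lines.extend(f"  - {_yaml_str(t)}" for t in h.themes)
    else:
        lines.append("themes: []")
    lines += ["---", ""]
    lines.extend(f"> {line}" for line in h.text.splitlines())  # highlight as a blockquote
    if h.summary:
        lines += ["", h.summary]
    return "\n".join(lines) + "\n"
```

Quoting the timestamp keeps YAML parsers from guessing its type; YAML 1.1 parsers, for instance, read an unquoted `1:02:03` as a base-60 integer. A Shortcut could then save the returned string as a `.md` file in your vault folder.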

The pipeline that was broken at the audio capture step is now not only complete but intelligent, and the intelligence runs where your data lives: on your own device.

For the PKM-Minded Reader

If you've invested in building a second brain, VoxLight isn't a replacement for the tools you're already using. It's the input layer those tools were missing.

Readwise is excellent at what it does. Obsidian is excellent at what it does. The gap was always upstream — at the moment of capture, before the highlight exists to be processed. VoxLight fills that gap, and because it integrates with Apple Intelligence on-device, it fills it without asking you to compromise on the data sovereignty you've been building toward.

Your reading data should belong to you. All of it. Including the parts you absorbed while listening.

VoxLight is currently in development, with a beta launching in March 2026. Sign up for early access at voxlight.app.